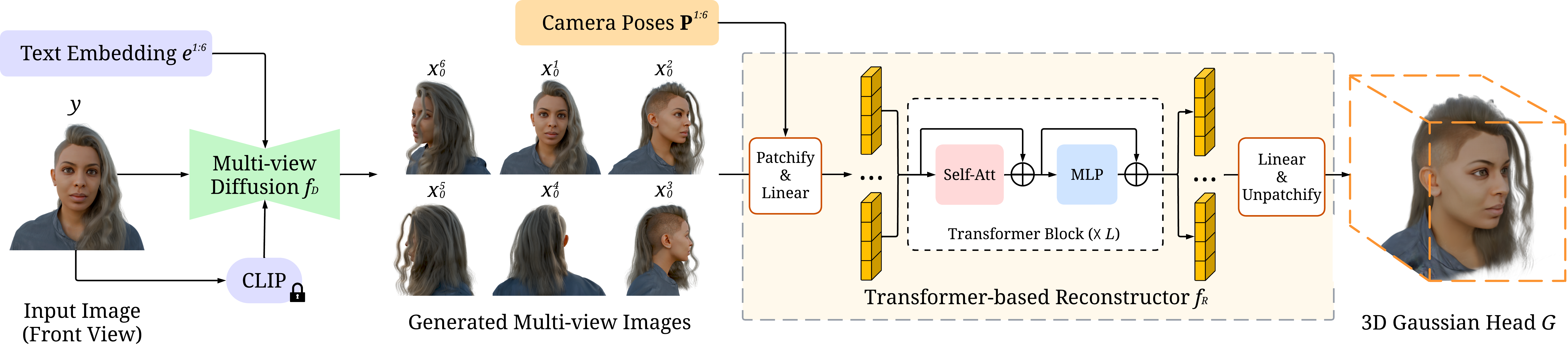

We present FaceLift, a novel feed-forward approach for generalizable, high-quality 360-degree head reconstruction from a single image. Our pipeline first employs a multi-view latent diffusion model to generate consistent side and back views from a single facial input, which then feed into a transformer-based reconstructor that produces a comprehensive 3D Gaussian Splats representation. Previous methods for monocular 3D face reconstruction often lack full view coverage or view consistency due to insufficient multi-view supervision. We address this by creating a high-quality synthetic head dataset that enables consistent supervision across viewpoints. To bridge the domain gap between synthetic training data and real-world images, we propose a simple yet effective technique that ensures the view-generation process maintains fidelity to the input by learning to reconstruct the input image alongside view generation. Despite being trained exclusively on synthetic data, our method demonstrates remarkable generalization to real-world images. Through extensive qualitative and quantitative evaluations, we show that FaceLift outperforms state-of-the-art 3D face reconstruction methods in identity preservation, detail recovery, and rendering quality.